This year’s Consumer Electronics Show (CES) featured drones that follow their owners, lip-reading cars, robots that recognize your family members, and head-mounted VR games controlled with your gaze. By comparison, today’s “smart” phones feel as quaint as a pocket calculator.

In fact, “smart” and “intelligent” are almost meaningless terms. What computer isn’t somewhat smart? Yet five years after you buy one, it feels like an antique. Wouldn’t a genuinely smart device be more capable after five years of learning, practising, and growing – just like a person?

Any smart device worthy of the name should be able to figure out what I want with minimal input from me. It should sense my intent, perhaps even before I do. It wouldn’t read my mind, exactly, but it would get a feeling for how I think, just as my friends and colleagues do, based on what I tell them and what they see me doing. And, like a person who knows me well, smart systems would detect the intention or meaning behind my words, perhaps just from reading my facial expression or observing my body language.

Actually, computing systems are increasingly able to do that, and more. They can infer human intentions by using behavioral models and environmental cues. Until now, we’ve told the machines what to do. Soon, they will infer our intentions and proactively do what we want, without being told – almost as if they can read our minds.

If you could read my mind

To be clear, we’re not talking about a brain-computer interface, where electrodes are directly attached to our heads. Instead, systems will use external sensors such as cameras, microphones, and infrared sensors that pick up on clues that indicate our intentions. For example, head position and gaze are clear indications of what someone is paying attention to.

When a driver looks over her shoulder, it’s a signal that she may be planning to change lanes. Likewise, facial expression and tone of voice can tell an autonomous vehicle when the driver is angry, nervous, or just not paying attention to the road. Pupil dilation can indicate surprise, while skin flush can mean embarrassment or the adrenalin rush of excitement.

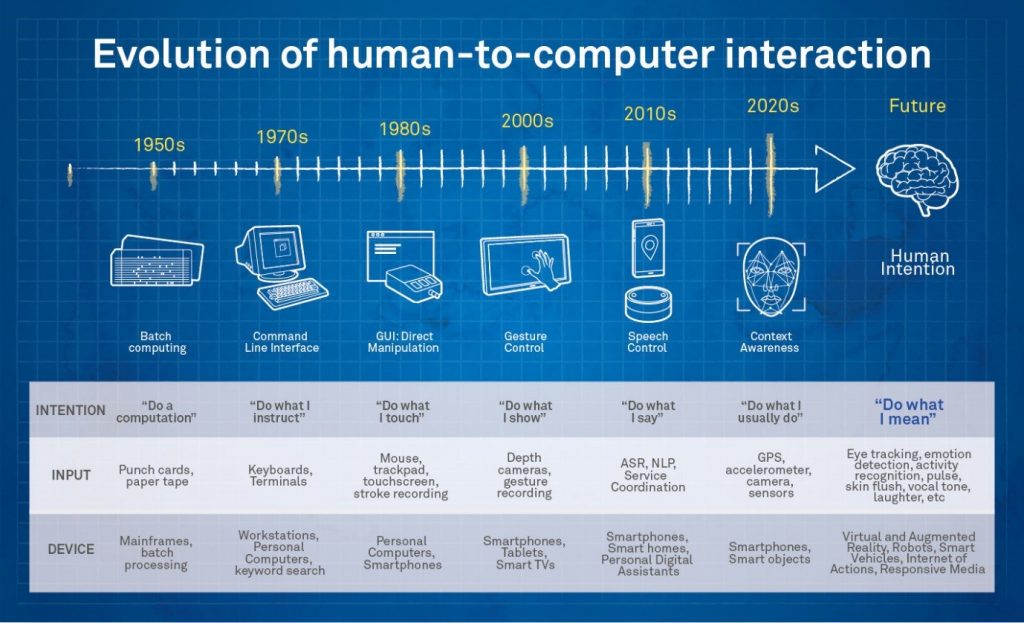

This follows a natural progression in Human-Computer Interaction (HCI), which is the science of telling computers what to do in ways that are easier and more efficient for humans. In the early days, mainframes and minicomputers needed commands that were highly specific and pretty technical. Then came the PC era, with user-friendly interfaces like icons, buttons, and menus. More recently, we’ve started using gestures and speech.

Soon, our devices won’t have keyboards, touch screens, or other traditional interfaces. In fact, they won’t look like computers or phones at all. Instead, they will be semi- or fully autonomous devices, such as vehicles, drones, and robots. They could also be connected appliances, or head-mounted displays like VR/AR goggles.

When the objects in homes, offices, and factories are linked through the Internet of Things, you won’t need a touch-screen keypad to tell your fridge to check the food for spoilage. You won’t need to enter directions on your car’s dashboard to get home at the end of the day. The systems will know what to do.

To some extent, they know already. Your phone, for example, may tell you when to leave for an appointment, factoring in traffic conditions. You needn’t tell the phone to allow extra time; if it’s rush hour, the phone makes the adjustment automatically. As more sensors come online, this trend will accelerate, not only because more data are being generated, but also because machine learning and careful user-experience design will bring a device’s response more closely in line with its user’s intent.

Scary? Maybe. But only because we don’t yet expect our (still comparatively stupid) smartphones to know what we’re thinking. You don’t get nervous if a human colleague suggests leaving a little time for traffic, right? These technologies will be no more invasive than a human observer. We may feel uneasy if it’s not immediately clear to us how a phone could possess the information it does.

But if systems are transparent about how they collect and use data, we will quickly grow accustomed to the proactive services delivered by intelligent machines.

In addition to inferring our intentions, sensors have a more straightforward application: animating avatars in virtual reality. Facebook recently demonstrated the use of avatars in VR for socializing and collaborating with people in remote locations. Think of this as telling the computer to “do what I am.” Today those avatars are animated by head and hand position, along with facial expressions like surprise. Imagine the future of social computing when avatars can reflect fine-grained eye movements, skin flush, body language, and subtle facial expressions.

Who’s in control?

What does all of this augur for the future as sensing technologies become more accurate, less expensive, more pervasive, and better able to read detailed clues of human intention?

Humans read these clues all the time when they interact with each other. Now the machines will get in on the game.

For safety’s sake, devices should, in many cases, confirm that they have understood our intent correctly. That means they have to get our attention, politely but effectively. Systems will need to be considerate: Is the user interacting with other people? What is their emotional state? Ideally, devices should not interrupt people who are speaking, or jolt users with alarming notifications, or distract them with advertisements and other unwanted input.

In the distant future, after years of observing human activity patterns, artificial intelligence may produce computers that can realistically simulate the subtleties of human behavior. We might have digital replicas that stand in for us in virtual environments, acting on our behalf without our direct control.

That’s a long way off, and it would require far more advanced intelligence than the famous Turing test demands, in which judges try to distinguish between human and computer responses to a series of questions.

But the real touchstone of intention detection isn’t a synthetic doppelgänger so authentic that one’s closest friends can’t recognize it as such. The ultimate test occurs when other humans do not mind that they’re interacting with a simulacrum. In some ways, they might even prefer it to the original.

Until then, the paradigm of human-computer interaction will keep shifting. Computers are taking greater control of tasks while requiring less input. That will make our lives better, as long as we make sure the machines get it right.

The author is the Vice President and Global Head of Huawei Technologies’ Media Lab, focusing on the creation of next-generation digital media experiences. This article first appeared in the Huffington Post.
